Current and Future Use of Machine and Deep Learning Systems

Ji-Peng Olivia Li, MA(Cantab), MBBS, FRCOphth; Jodhbir S. Mehta, BSc (Hons), MBBS, PhD, FRCOphth, FRCS(Ed), FAMS; and Daniel Shu-Wei Ting, MBBS (Hons), BSciMed, FRCOphth, MMed(Ophth), FAMS, PhD(UWA)

Interest in artificial intelligence (AI) is exploding. AI, the science of developing computer systems to perform tasks that normally require human intelligence, is a broad field that encompasses visual perception, speech recognition, and decision-making. The areas of AI having the greatest impact on health care are machine learning and deep learning. Machine learning is based on statistical methods: algorithms process vast amounts of data, often labeled, to find patterns and learn from experience without being explicitly programmed to do so. Deep learning is a subfield of machine learning that uses multilayered artificial neural networks, such as convolutional neural networks, whose structure is loosely inspired by the human brain. These networks can learn from labeled data (supervised learning) or from unlabeled data (unsupervised learning).

Ophthalmology, radiology, and dermatology are at the forefront of image-based diagnosis, which is particularly suited to machine learning techniques. Within ophthalmology, algorithms for diagnosing retinal disease from fundus photographs and, more recently, OCT images are by far the most advanced examples of this digital revolution. The FDA has approved the use of IDx-DR (IDx), an algorithm used to screen patients for diabetic retinopathy, and the imaging and informatics in retinopathy of prematurity system (i-ROP DL, i-ROP consortium) received FDA breakthrough device status for diagnosing retinopathy of prematurity.

A wealth of data are readily available through retinal screening programs for diabetic retinopathy and retinopathy of prematurity. In contrast, challenges related to the quality and quantity of baseline data have slowed advances in the use of AI for the anterior segment. Additional obstacles relate to the consistency and reproducibility of imaging techniques and the accessibility of raw topographic and tomographic images. As technologies improve and their availability becomes more widespread, however, researchers will become better able to elucidate the potential benefits of AI for the anterior segment. Our article and those that follow it provide examples of this work.

CATARACT

Home-monitoring techniques using smartphones are making remote cataract screening services possible.1 AI platforms that analyze slit-lamp images have been shown to substantially improve the productivity of these screening services. More widespread adoption of these platforms could have a major impact on patient care in regions where ophthalmology services are limited.

Machine learning can also improve the refractive accuracy of cataract surgery. Algorithms refined through training on comparisons of pre- and postoperative anterior segment OCT images can predict the effective lens position (ELP) more accurately (see “The Impact of Big Data on IOL Power Calculation”).
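As a minimal sketch of the ELP prediction idea, assuming a plain linear model rather than any published method, the snippet below fits postoperative anterior chamber depth (a common proxy for ELP that could be measured on postoperative AS-OCT) to three preoperative measurements by ordinary least squares. The feature set and all numbers are synthetic and chosen only for illustration.

```python
import numpy as np

# Synthetic, illustrative data only (not from any study): preoperative axial
# length (AL), anterior chamber depth (ACD), and lens thickness (LT), in mm.
rng = np.random.default_rng(0)
n = 200
al = rng.normal(23.5, 1.0, n)
acd = rng.normal(3.1, 0.3, n)
lt = rng.normal(4.5, 0.3, n)

# Hypothetical relationship used to generate the postoperative ACD (the ELP
# proxy), plus measurement noise.
post_acd = 0.5 + 0.1 * al + 0.4 * acd + 0.2 * lt + rng.normal(0, 0.05, n)

# Design matrix with an intercept column; fit by ordinary least squares.
X = np.column_stack([np.ones(n), al, acd, lt])
coef, *_ = np.linalg.lstsq(X, post_acd, rcond=None)
residuals = post_acd - X @ coef

def predict_elp(al_mm, acd_mm, lt_mm):
    """Predict postoperative ACD (ELP proxy, mm) for one eye from the fitted model."""
    return float(coef @ np.array([1.0, al_mm, acd_mm, lt_mm]))

print("coefficients:", np.round(coef, 2))
print("RMSE (mm):", round(float(np.sqrt(np.mean(residuals ** 2))), 3))
print("predicted ELP (mm):", round(predict_elp(24.0, 3.2, 4.4), 2))
```

Real ELP models are typically nonlinear and use more inputs; the point here is only that pre/postoperative pairs turn ELP prediction into a supervised learning problem.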

KERATOCONUS

Devices such as the Pentacam (Oculus Optikgeräte) use indices that help clinicians estimate the risk of disease progression. Efforts are underway to incorporate more data into algorithms and to use machine learning techniques to determine the likelihood of disease progression. The Pentacam, Casia SS-1000 OCT (Tomey), Orbscan (Bausch + Lomb), and Corvis ST (Oculus Optikgeräte) are some of the devices being used to collect data to train, test, and validate these algorithms.

Machine learning also holds promise for improving the early diagnosis of subclinical keratoconus. With monitoring, the database of topographic and other measurements will grow and provide the information required by machine learning algorithms to make accurate diagnoses. In time, AI may be able to predict the extent of disease progression and the response to CXL.

REFRACTIVE SURGERY

The development of ectasia after laser refractive surgery is a dreaded complication, which explains the great interest in effective methods of screening patients for undetected underlying ectatic disease. Several researchers are studying the use of machine learning techniques and modern topographers for this purpose (see “Screening for Ectatic Corneal Disease With Multimodal Imaging”).

Machine learning can also be used to enhance the patient experience by increasing the accuracy with which postoperative outcomes are predicted. At present, accuracy is lower for patients with high myopia and astigmatism, but it may improve over time as more data become available for training algorithms.

INFECTIOUS KERATITIS

Infectious keratitis is a major cause of corneal opacification globally, and the causative organisms vary geographically. The diagnostic ability of existing methods such as corneal scrapes with microscopy, polymerase chain reaction, culture, staining, and confocal microscopy is unsatisfactory in real-world clinical settings.

AI may prove helpful for diagnosis. Standard photographs and anterior segment OCT scans are being used, with some success, to train algorithms, and AI may assist in the diagnosis of fungal and Acanthamoeba keratitis. Being able to diagnose corneal ulcers from standard photographs would have a major impact on the provision of emergency eye services; it could also facilitate the judicious use of antimicrobial treatments and reduce delays in initiating effective therapy.

Photography-based diagnosis with AI would be beneficial in areas with limited resources and few ophthalmologists. It also has a role in remote telemedicine services, whose utility became more apparent than ever during the COVID-19 pandemic (see “Embracing Teleophthalmology”).

PTERYGIUM

Deep learning has also been used in the remote diagnosis of pterygium. These algorithms have been combined with telemedicine services and platforms to counsel patients on treatment options.2

Advances in this use of AI may be especially beneficial in areas served by few ophthalmologists.

SPECULAR MICROSCOPY

Automated segmentation of specular microscopy images is being investigated, with some success, for identifying and assessing corneal endothelial cells. Currently, endothelial cell counts and the evaluation of variation in cell size (polymegathism) and hexagonality rely on inbuilt software that defines cells automatically. Results obtained with this software often correspond poorly with those obtained through manual segmentation, a process that simplifies images by labeling meaningful boundaries. Segmentation of the corneal endothelium becomes more challenging when image quality is poor or the endothelium is diseased, yet these are precisely the situations in which clear information on the state of the endothelium is most important.

Deep learning has been used to identify areas where cells can be segmented, and to evaluate those cells as reliably as human assessors and more reliably than some existing inbuilt software. Further validation in real-world cases of corneal disease and after graft surgery could lead to wider adoption of endothelial assessment in routine practice, given the ease and accuracy of the analysis.
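Whatever tool performs the segmentation, the endothelial parameters mentioned above are derived from the segmented cells in a standard way. The sketch below assumes a hypothetical list of segmented cells, each with an area and a neighbor count, and computes endothelial cell density, the coefficient of variation of cell area, and hexagonality. Commercial software may apply additional rules (eg, excluding cells touching the image border), which are not modeled here.

```python
from dataclasses import dataclass
from statistics import mean, pstdev

@dataclass
class Cell:
    area_um2: float   # segmented cell area in square micrometers
    n_neighbors: int  # number of cells sharing a border with this cell

def endothelial_metrics(cells):
    """Return (density in cells/mm^2, CV of cell area in %, hexagonality in %)."""
    areas = [c.area_um2 for c in cells]
    mean_area = mean(areas)
    # 1 mm^2 = 1,000,000 um^2, so density is the reciprocal of the mean cell area.
    density = 1_000_000 / mean_area
    # Coefficient of variation of cell area (polymegathism): SD / mean.
    cv = 100 * pstdev(areas) / mean_area
    # Hexagonality: percentage of cells with exactly six neighbors.
    hexagonality = 100 * sum(c.n_neighbors == 6 for c in cells) / len(cells)
    return density, cv, hexagonality

# Four hypothetical segmented cells.
cells = [Cell(350, 6), Cell(400, 6), Cell(450, 5), Cell(400, 7)]
density, cv, hexagonality = endothelial_metrics(cells)
print(f"ECD {density:.0f} cells/mm^2, CV {cv:.1f}%, hexagonality {hexagonality:.0f}%")
```

Because every parameter depends on where the cell boundaries are drawn, segmentation errors propagate directly into these numbers, which is why the quality of segmentation matters so much in diseased endothelium.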

GLAUCOMA

Early AI studies in glaucoma relied on the interpretation of fundus photographs and disc images. Since then, the advent of anterior segment OCT has greatly improved clinicians’ understanding of angle anatomy. Both machine learning and deep learning techniques have been used to diagnose angle-closure glaucoma, including the classification of its subtypes. Real-world validation of research results is required.

The influence of race and ethnicity on patients’ risk of developing angle-closure glaucoma must be taken into account when evaluating algorithms trained on specific populations, particularly when race and ethnicity are not reported. Because machine learning can identify previously unknown associations, including spurious ones, the datasets used for training must be carefully designed and selected to avoid potential bias.

SYSTEMIC DISEASE

AI has the potential to facilitate the diagnosis and monitoring of systemic diseases using slit-lamp photographs. The evaluation of conjunctival photographs has been shown to be an accurate, noninvasive method of diagnosing anemia. Recently, investigators have demonstrated that the conjunctiva, sclera, and iris can be used to identify patients with hepatobiliary disease, particularly liver cancer and cirrhosis.3

CONCLUSION

The potential for AI to uncover previously unknown associations is exciting, as is the possibility that deep learning systems will be able to make more accurate diagnoses than the clinician in certain instances.

1. Wu X, Huang Y, Liu Z, et al. Universal artificial intelligence platform for collaborative management of cataracts. Br J Ophthalmol. 2019;103(11):1553-1560.

2. Zhang K, Liu X, Liu F, et al. An interpretable and expandable deep learning diagnostic system for multiple ocular diseases: qualitative study. J Med Internet Res. 2018;20(11):e11144.

3. Xiao W, Huang X, Wang JH, et al. Screening and identifying hepatobiliary diseases through deep learning using ocular images: a prospective, multicentre study. Lancet Digit Health. 2021;3(2):e88-e97.

Advanced Vision Analyzer

Ashvin Agarwal, MS

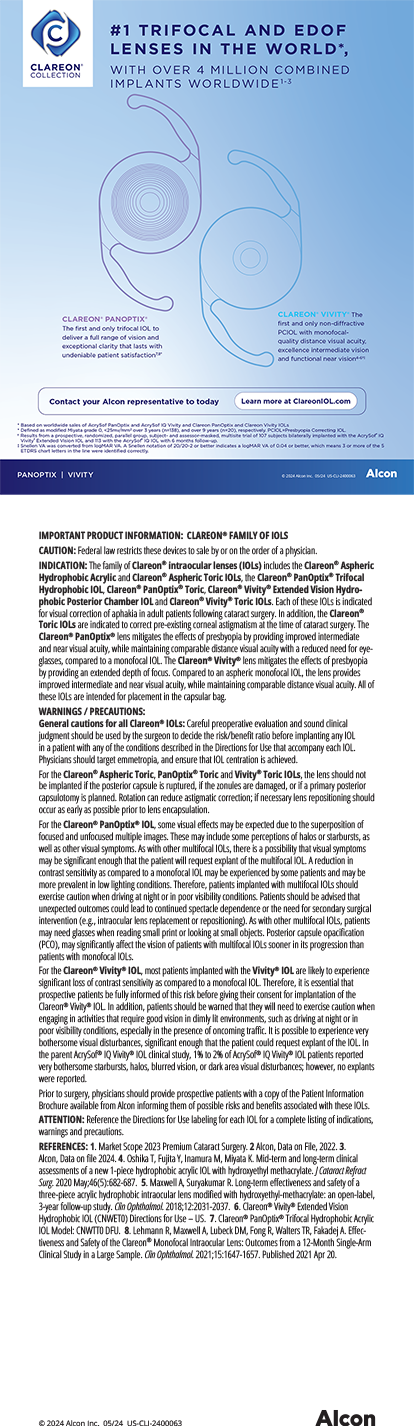

Automated perimetry is an essential tool for documenting visual field defects of glaucomatous or neurological origin. Standard automated perimetry (SAP) is a well-accepted test that measures the threshold sensitivity at each tested point in the visual field, but it traditionally relies on costly tabletop systems with limited mobility. The Advanced Vision Analyzer (AVA, Elisar Vision Technology), which meets the global standards for SAP devices, could make this testing more widely available around the world.

HARDWARE AND SOFTWARE

The AVA is a small battery-powered autoperimeter that can perform visual field threshold measurements. The portable unit does not require a separate dark room for testing. Nor is it necessary to occlude the patient’s fellow eye.

The AVA consists of a head-mounted device similar to a virtual reality headset, a handheld patient response button, and an Android tablet that serves as a controller to perform, record, and analyze the test program (Figure 1). The lightweight head-mounted device provides flexibility in patient positioning; it allows patients to take the test while reclined, which can be particularly helpful for elderly, pediatric, pregnant, and physically disabled patients.

Figure 1. Visual field testing is performed with the AVA.

Clinical data are encrypted for security. Health care providers can retrieve previous test results from cloud storage by entering patient details such as name, phone number, and email address. Software on the tablet regularly uploads each patient’s data to the cloud. The tablet can connect wirelessly to a printer, or reports can be delivered by email.

TESTING

The AVA offers white-on-white perimetry with a stimulus intensity ranging from 0 to 40 dB. The test is performed with a Goldmann size III stimulus and either a 24-2 or a 30-2 program. The available testing strategies are suprathreshold, full-threshold, Elisar Zest, and Elisar Fast. Fixation is monitored with the Heijl-Krakau blind spot method and with an integrated infrared eye tracker that provides a live feed of the tested eye on the test controller; this tracking allows the operator to monitor the patient and minimizes fixation losses. Adjustable interpupillary distance helps to ensure that field measurements are accurate.
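ZEST (Zippy Estimation by Sequential Testing) is a published Bayesian thresholding strategy; how Elisar Zest implements it is not described in this article. The following is therefore a generic sketch at a single test location under assumed parameters: a uniform prior over 0 to 40 dB, a cumulative-Gaussian psychometric function with a 1-dB spread, 3% false-positive and false-negative rates, and a stop once the posterior standard deviation falls below 1.5 dB.

```python
import math
import random

LEVELS_DB = list(range(0, 41))  # candidate thresholds: 0-40 dB

def p_seen(stim_db, threshold_db, slope_db=1.0, fp=0.03, fn=0.03):
    """Assumed psychometric function: probability of a 'seen' response.
    Higher dB means a dimmer stimulus, so stimuli below threshold are usually seen."""
    z = (threshold_db - stim_db) / slope_db
    phi = 0.5 * (1 + math.erf(z / math.sqrt(2)))  # cumulative Gaussian
    return fp + (1 - fp - fn) * phi

def posterior_stats(pmf):
    mean = sum(t * p for t, p in zip(LEVELS_DB, pmf))
    sd = math.sqrt(sum((t - mean) ** 2 * p for t, p in zip(LEVELS_DB, pmf)))
    return mean, sd

def zest(respond, max_presentations=20, stop_sd_db=1.5):
    """Generic ZEST at one location; respond(stim_db) returns True if seen."""
    pmf = [1 / len(LEVELS_DB)] * len(LEVELS_DB)  # uniform prior
    presentations = 0
    while presentations < max_presentations:
        mean, _ = posterior_stats(pmf)
        stim = round(mean)  # present at the current posterior mean
        seen = respond(stim)
        presentations += 1
        # Bayes' rule: weight each candidate threshold by the response likelihood.
        likelihood = [p_seen(stim, t) if seen else 1 - p_seen(stim, t) for t in LEVELS_DB]
        unnorm = [p * l for p, l in zip(pmf, likelihood)]
        total = sum(unnorm)
        pmf = [u / total for u in unnorm]
        if posterior_stats(pmf)[1] < stop_sd_db:
            break
    return posterior_stats(pmf)[0], presentations

# Simulated observer whose responses follow the same model with a 28-dB threshold.
rng = random.Random(1)
estimate, presentations = zest(lambda s: rng.random() < p_seen(s, 28))
print(f"estimated threshold {estimate:.1f} dB after {presentations} presentations")
```

The appeal of Bayesian strategies such as ZEST is that they concentrate presentations near the most likely threshold, which typically shortens testing compared with a full-threshold staircase.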

The printed test report (Figure 2) has four parts: (1) patient information, test type, and test duration; (2) absolute threshold values and a gray-scale plot; (3) a gradient plot based on the threshold results; and (4) reliability parameters such as the false-positive rate, false-negative rate, and fixation losses.

Figure 2. Test report of a case demonstrating GIs, visual field parameters, and the threshold gray-scale chart.

Figures 1 and 2 courtesy of Ashvin Agarwal, MS

The report includes defect significance and global indices (GIs) that are compared with a normative database built specifically for the AVA. The GIs summarize the sensitivity values produced by the test, provide an overview of the visual field, and aid comparison of results for an individual eye across follow-up visits. The report also details total deviation, pattern deviation, and the glaucoma hemifield test. As with conventional SAP devices, the AVA’s glaucoma hemifield test compares visual field defects across the horizontal meridian, with results categorized as within normal limits, borderline, or outside normal limits.
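The AVA’s normative database and exact index formulas are not given here. As a simplified illustration of how global indices are generally derived, the sketch below computes total deviation (measured minus age-expected threshold) and unweighted versions of mean deviation (MD) and pattern standard deviation (PSD). Commercial perimeters usually weight each location by its normative variance, so these unweighted values are an assumption, and the threshold values are made up.

```python
from statistics import mean, stdev

def total_deviation(measured_db, expected_db):
    """Per-location difference between measured and age-expected threshold (dB)."""
    return [m - e for m, e in zip(measured_db, expected_db)]

def global_indices(measured_db, expected_db):
    """Unweighted MD (overall depression) and PSD (irregularity of the field)."""
    td = total_deviation(measured_db, expected_db)
    md = mean(td)
    psd = stdev(td)
    return md, psd

# Six hypothetical locations; two show a localized 10-dB defect.
expected = [30, 30, 29, 29, 28, 28]
measured = [29, 30, 19, 28, 18, 27]
md, psd = global_indices(measured, expected)
print(f"MD = {md:.2f} dB, PSD = {psd:.2f} dB")
```

A diffuse loss lowers MD while leaving PSD small, whereas a localized defect like the one above raises PSD, which is why the two indices are reported together.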

CONCLUSION

Studies comparing the AVA with a conventional autoperimeter demonstrated an equivalent ability to detect all forms of visual field defects. The report generated by the AVA meets international standards and adheres to the format to which ophthalmologists are accustomed.

Clinical trials have established the AVA’s utility for detecting and analyzing visual field defects in patients of all ages and health statuses. During the COVID-19 pandemic, the AVA also offers an alternative for patients whose health could be threatened by a visit to a central testing site.

Screening for Ectatic Corneal Disease With Multimodal Imaging

Renato Ambrósio Jr, MD, PhD, and Riccardo Vinciguerra, MD

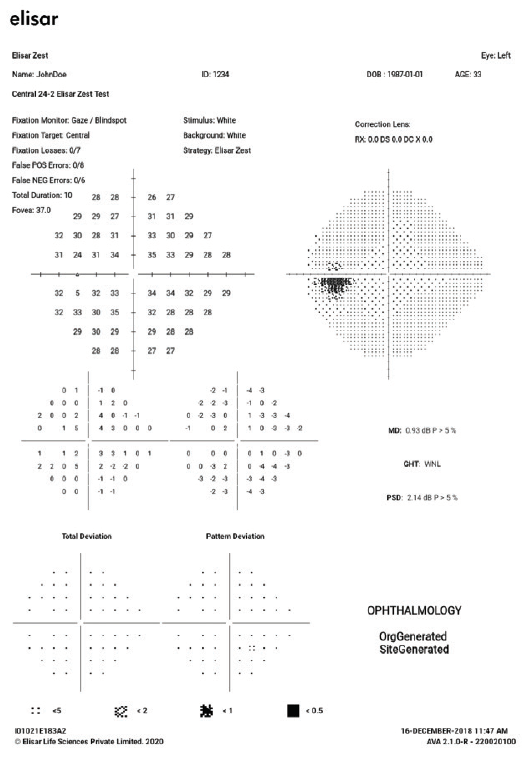

Screening for the risk of ectatic progression and corneal abnormalities is a crucial part of the preoperative planning process for refractive surgery. Developments in multimodal imaging tools from Placido disc–based topography to Scheimpflug 3D tomography, high-frequency ultrasound, and OCT have increased our ability to detect even mild disease, to select appropriate candidates for laser and lens-based vision correction, and to customize the surgical procedure.1

Besides the characterization of corneal shape, the clinical assessment of biomechanical properties has gained momentum in screening for ectatic progression. We now know that the pathophysiology of ectasia is related to biomechanical failure, which has helped us to evolve from the diagnosis of mild (subclinical) keratoconus toward the characterization of the susceptibility for ectatic progression after laser vision correction.2

QUICK BREAKDOWN

Placido-based topography characterizes the anterior corneal surface together with the tear film. Tomography characterizes the anterior segment in three dimensions, including front and back surface elevation and thickness mapping. High-frequency ultrasound and OCT provide layered (segmental) tomography, enabling epithelial thickness mapping. Each of these devices provides relevant information, and together they allow a more complete characterization of the cornea that can inform clinical decision-making.

The Corvis Biomechanical Index (CBI), a combined index based on corneal biomechanical parameters, was the first step toward improving the detection of corneal ectasia. The CBI has been shown to be highly sensitive and specific for diagnosing keratoconus.3
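Combined indices of this kind are commonly built by weighting several measured parameters in a logistic regression model, which maps a weighted sum onto a 0-to-1 scale. The sketch below shows only that general form. The parameter names, coefficients, and 0.5 cutoff are hypothetical and are not the published CBI formula (see reference 3 for the actual index).

```python
import math

# Hypothetical coefficients for illustration only; these are NOT the published CBI values.
INTERCEPT = -2.0
WEIGHTS = {
    "stiffness_z": -1.5,           # hypothetical standardized stiffness parameter
    "deformation_ratio_z": 2.0,    # hypothetical standardized deformation parameter
    "thinnest_thickness_z": -1.0,  # hypothetical standardized thinnest corneal thickness
}

def combined_index(features):
    """Logistic combination of standardized parameters, giving a score between 0 and 1."""
    z = INTERCEPT + sum(w * features[name] for name, w in WEIGHTS.items())
    return 1 / (1 + math.exp(-z))

eye = {"stiffness_z": -1.0, "deformation_ratio_z": 1.2, "thinnest_thickness_z": -0.8}
score = combined_index(eye)
print(f"index = {score:.2f} ({'suspect' if score > 0.5 else 'normal'} at a 0.5 cutoff)")
```

In practice the weights are fitted to labeled normal and keratoconic eyes, and the cutoff is chosen from the resulting sensitivity/specificity trade-off.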

Several years ago, we collaborated with Ahmed Elsheikh, PhD, to devise a biomechanically corrected measurement of IOP.4-6 Further work with Cynthia Roberts, PhD,3,7,8 led to the development of the Ambrósio, Roberts, and Vinciguerra (ARV) display (Figure 3), which integrates data from the Pentacam and Corvis ST (Oculus Optikgeräte). The ARV uses AI to combine biomechanical and tomographic assessments to enhance the accuracy of ectasia detection.9-12 The tomography and biomechanical index (TBI), available in the ARV display, was developed with AI using the random forest approach. The TBI demonstrated higher sensitivity than previous indices in eyes with normal topography from patients with very asymmetric ectasia, meaning that it can help to detect even the most subtle disease.
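The TBI’s actual inputs and trained model are not reproduced in this article. To illustrate the random forest approach it is based on, the sketch below trains scikit-learn’s RandomForestClassifier on synthetic data with two hypothetical features, a tomographic score and a biomechanical score, and reads out a 0-to-1 index from the forest’s averaged class probabilities. The data, features, and settings are all assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic cohort (illustration only): 1 = ectatic or susceptible eye, 0 = normal.
rng = np.random.default_rng(42)
n = 400
y = rng.integers(0, 2, n)
tomographic = rng.normal(loc=1.0 * y, scale=0.8)    # hypothetical tomographic score
biomechanical = rng.normal(loc=1.0 * y, scale=0.8)  # hypothetical biomechanical score
X = np.column_stack([tomographic, biomechanical])

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y)

# An ensemble of decision trees, each grown on a bootstrap sample of the training eyes.
forest = RandomForestClassifier(n_estimators=200, random_state=0)
forest.fit(X_train, y_train)

# Mean of the trees' class probabilities: a 0-1 score, similar in spirit to the TBI.
index = forest.predict_proba(X_test)[:, 1]
accuracy = forest.score(X_test, y_test)
print(f"test accuracy: {accuracy:.2f}")
print("first five index values:", np.round(index[:5], 2))
```

Because each tree can split on either feature, the forest can pick up eyes that look abnormal on only one modality, which is the rationale for combining tomographic and biomechanical data.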

Figure 3. ARV display of a 30-year-old patient with unremarkable biomicroscopy, a best-corrected distance visual acuity of 20/15, and clinical ectasia in the fellow eye. Note the normal symmetric bow-tie pattern with mild with-the-rule astigmatism and the relatively thin cornea. The CBI of 0.9 and TBI of 0.5 help to identify susceptibility to ectasia, or forme fruste keratoconus, in this eye.

Courtesy of Renato Ambrósio Jr, MD, PhD

CONCLUSION

There is a continuous evolution in diagnostic technologies. In recent work by the Brazilian Artificial Intelligence Networking in Medicine, optimized AI has enhanced the accuracy of the TBI using a larger database from an international multicenter group. The evaluation of corneal layers with tomography should provide parameters for integration into AI. Further developments in the early detection of ectatic corneal disease are expected to include genetics and molecular biology.

1. Ambrósio R Jr. Multimodal imaging for refractive surgery: Quo vadis? Indian J Ophthalmol. 2020;68:2647-2649.

2. Ambrósio R Jr. Post-LASIK ectasia: twenty years of a conundrum. Semin Ophthalmol. 2019;34:66-68.

3. Vinciguerra R, Ambrósio R Jr, Elsheikh A, et al. Detection of keratoconus with a new biomechanical index. J Refract Surg. 2016;32:803-810.

4. Chen KJ, Eliasy A, Vinciguerra R, et al. Development and validation of a new intraocular pressure estimate for patients with soft corneas. J Cataract Refract Surg. 2019;45:1316-1323.

5. Eliasy A, Chen KJ, Vinciguerra R, et al. Ex-vivo experimental validation of biomechanically-corrected intraocular pressure measurements on human eyes using the CorVis ST. Exp Eye Res. 2018;175:98-102.

6. Lee H, Roberts CJ, Kim TI, Ambrósio R Jr, Elsheikh A, Yong Kang DS. Changes in biomechanically corrected intraocular pressure and dynamic corneal response parameters before and after transepithelial photorefractive keratectomy and femtosecond laser-assisted laser in situ keratomileusis. J Cataract Refract Surg. 2017;43:1495-1503.

7. Roberts CJ, Mahmoud AM, Bons JP, et al. Introduction of two novel stiffness parameters and interpretation of air puff-induced biomechanical deformation parameters with a dynamic Scheimpflug analyzer. J Refract Surg. 2017;33:266-273.

8. Vinciguerra R, Ambrósio R Jr, Roberts CJ, Azzolini C, Vinciguerra P. Biomechanical characterization of subclinical keratoconus without topographic or tomographic abnormalities. J Refract Surg. 2017;33:399-407.

9. Ambrósio R Jr, Lopes BT, Faria-Correia F, et al. Integration of Scheimpflug-based corneal tomography and biomechanical assessments for enhancing ectasia detection. J Refract Surg. 2017;33:434-443.

10. Koh S, Inoue R, Ambrósio R Jr, Maeda N, Miki A, Nishida K. Correlation between corneal biomechanical indices and the severity of keratoconus. Cornea. 2020;39:215-221.

11. Esporcatte LPG, Salomao MQ, Lopes BT, et al. Biomechanical diagnostics of the cornea. Eye Vis (Lond). 2020;7:9.

12. Salomao MQ, Hofling-Lima AL, Gomes Esporcatte LP, et al. Ectatic diseases. Exp Eye Res. 2021;202:108347.

AI and Robotics: The Future of Ophthalmology

Michael Assouline, MD, PhD

According to the World Health Organization, 2.2 billion people worldwide are visually impaired or blind, and almost half of these cases are preventable. In the United States alone, the economic burden of conditions that affect vision is estimated at $153 billion.1 Furthermore, the current myopia and diabetes pandemics will dramatically increase the demand for eye care in the near future. Will we be able to meet the needs of more patients? With only about 220,000 ophthalmologists and 300,000 optometrists practicing worldwide, access to eye care is precarious. Based on projections, we calculate that there will be a shortage of around 1 million eye care professionals by 2025 simply to meet the World Health Organization’s guidelines for eye examinations (data on file with Mikajaki).2

BARRIERS TO EFFECTIVE CARE

The industrial revolution and advances in information technology have been slower to transform medicine than other professions. Since its ancient origins, ophthalmology has remained an artisanal profession. Lack of access to care and the often brief interactions between patients and their doctors may not provide an optimal foundation for the prevention of vision loss. Diagnostic processes are often selective rather than comprehensive, potentially biasing diagnosis and management.

Additionally, despite recent developments in ophthalmology, diagnostic procedures are quite tedious for both patients and professionals, and their cost-effectiveness remains limited. As a result, difficulties in accessing care and human shortcomings are among the most significant causes of preventable vision loss.

POTENTIAL SOLUTIONS

The world today is brimming with opportunities to accelerate technological innovation with AI and robotics.3 After 30 years of working with research and development teams on surgical implants and lasers, I am still fascinated by the progress made in these fields through the disruptive intelligence of individuals. Today, AI methods, detached from human inference and from the contingencies of traditional statistical sampling, are proving capable of handling big data, making data science more accessible, transparent, and effective for ophthalmology. Automation, which is well established in the production of goods and services, should also help to optimize the efficiency of medical practices. In the future, we may see smart robots interacting with patients.

In the specific fields of cataract and refractive surgery, most diagnostic features may be processed more adequately with data science than with human judgment. These features include the measurement of refraction, the evaluation of cataracts, and the detection of keratoconus, as well as surgical planning decisions such as LASIK versus surface ablation, photoablation profiles, and customized IOL calculations.

NEW VENTURES

In 2018, my colleagues and I set out to reengineer the diagnostic approach we use in ophthalmology by integrating recent developments in virtual agents, robotics, and AI into a streamlined process. The goal is to generate the medical intelligence necessary for the diagnosis and management of eye conditions in a fully automated way. This project combines three complementary elements under the umbrella of the Ariane Eye-Suite (Figure 4).

Figure 4. Elements of the Ariane Eye-Suite.

Courtesy of Michael Assouline, MD, PhD

Element No. 1: Ariane-InSight. This multilingual robotic chatbot with online accessibility uses the algorithmic implementation of a decision matrix relating 166 eye conditions to more than 600 subjective diagnostic criteria (ie, symptoms or risk factors). The probability of common or rare diagnoses is derived from an optimized dynamic selection of questions based on previous answers.
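Ariane-InSight’s decision matrix and question-selection method are not published here. As a hypothetical sketch of how a probability-driven chatbot can choose its next question, the code below keeps a posterior over a few made-up conditions, updates it with Bayes’ rule after each yes/no answer, and asks the question with the largest expected information gain, stopping once one diagnosis reaches 95% or the questions run out. The conditions, criteria, and likelihoods are invented and are not clinical data.

```python
import math

# Hypothetical P(criterion present | condition); invented numbers, not clinical data.
LIKELIHOOD = {
    "itching": {"allergic conjunctivitis": 0.90, "dry eye": 0.30, "acute angle closure": 0.02},
    "sudden pain": {"allergic conjunctivitis": 0.05, "dry eye": 0.10, "acute angle closure": 0.90},
    "gritty feeling": {"allergic conjunctivitis": 0.30, "dry eye": 0.85, "acute angle closure": 0.05},
}
PRIOR = {"allergic conjunctivitis": 0.45, "dry eye": 0.50, "acute angle closure": 0.05}

def entropy(dist):
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def update(post, question, answer):
    """Bayes' rule for a yes/no answer, followed by renormalization."""
    new = {c: p * (LIKELIHOOD[question][c] if answer else 1 - LIKELIHOOD[question][c])
           for c, p in post.items()}
    total = sum(new.values())
    return {c: p / total for c, p in new.items()}

def expected_gain(post, question):
    """Expected reduction in entropy (bits) from asking this question."""
    p_yes = sum(p * LIKELIHOOD[question][c] for c, p in post.items())
    after = (p_yes * entropy(update(post, question, True))
             + (1 - p_yes) * entropy(update(post, question, False)))
    return entropy(post) - after

def next_question(post, asked):
    remaining = [q for q in LIKELIHOOD if q not in asked]
    return max(remaining, key=lambda q: expected_gain(post, q)) if remaining else None

# Simulated patient who reports itching only; stop once one diagnosis reaches 95%.
answers = {"itching": True, "sudden pain": False, "gritty feeling": False}
post, asked = dict(PRIOR), []
while max(post.values()) < 0.95:
    q = next_question(post, asked)
    if q is None:
        break
    asked.append(q)
    post = update(post, q, answers[q])
print("questions asked:", asked)
print({c: round(p, 3) for c, p in post.items()})
```

Choosing each question by expected information gain is one common way to keep the number of steps small, which is the behavior the dynamic question selection described above aims for.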

The Ariane-InSight conversational agent is currently being tested by clinical experts. It can already diagnose 60 eye conditions from 120 criteria using, on average, fewer than a dozen steps, with confidence levels for these diagnoses ranging from 57% to 98%.

Element No. 2: Ariane-EyeLib. This workstation obtained the CE Mark in September 2020 and is undergoing further clinical evaluation before its European market launch. The platform uses biometric morphometric sensors and a combination of optoelectronic devices to automatically obtain about 100 distinct eye measurements that cover approximately 90% of the objective signs produced by conventional ophthalmology examinations, including frontofocometry, refractometry, wavefront aberrometry, tonometry, corneal topography, pachymetry, retinography, optical biometry, anterior and posterior OCT, lens transparency, tear film analysis, and endothelial specular microscopy. This fully automated cycle is performed in less than 5 minutes.

Element No. 3: Ariane-SmartVision Report. This comprehensive report synthesizes the subjective and objective data collected by the chatbot and the workstation. It uses AI and supervised machine learning to calculate the power of optical corrections for glasses, contact lenses, and implants. Using anatomical and optical information, the software suggests which actions should be taken (eg, eligibility for refractive surgery, degree of urgency for a specialist consultation, optimization of medical follow-up) and geolocates specialist practitioners. In early clinical testing, the AI-powered algorithms of the Ariane-SmartVision Report increased the proportion of subjective refraction and IOL performance predictions within ±0.25 D from 70%–75% to 80%–92% (data on file with Mikajaki).

CONCLUSION

The creation of medical intelligence should profoundly advance our ability to identify new markers and syndromic associations and strengthen our predictive capacity. It should also provide patients with the tools they need to advocate for their own eye health.

One day in the near future, augmented cerebral practitioners may become a reality, and it is my hope that—with effective decision-making tools—our sensibility, empathy, self-sacrifice, integrity, and respect for others will remain at the epicenter of patient care.

1. Cost of vision problems: the economic burden of vision and eye disorders in the United States. Prevent Blindness. June 11, 2013. Accessed February 5, 2021. https://preventblindness.org/wp-content/uploads/2020/04/Economic-Burden-of-Vision-Final-Report_130611_0.pdf

2. World report on vision. World Health Organization. October 8, 2019. Accessed February 16, 2021. https://www.who.int/publications/i/item/world-report-on-vision

3. Du XL, Li WB, Hu BJ. Application of artificial intelligence in ophthalmology. Int J Ophthalmol. 2018;11(9):1555-1561.

The Impact of Big Data on IOL Power Calculation

Damien Gatinel, MD, PhD

AI is poised to play a significant role in improving the accuracy and performance of IOL calculation formulas, especially for atypical eyes, such as a long eye with a flat cornea, and for eyes that have undergone previous refractive surgery. Work is underway to integrate AI with automated refraction devices, which will provide a framework for optimizing IOL formulas.

BACKGROUND

The five main types of IOL calculation formulas are historical vergence (Hoffer Q [HQ], SRK/T, Holladay I, Haigis), improved vergence (Barrett Universal II, Holladay II, Emmetropia Verifying Optical, Wang/Koch), ray tracing, pure AI (Hill-Radial Basis Function [RBF] 2.0), and hybrid methods (Kane; Prediction Enhanced by Artificial Intelligence and Output Linearization-Debellemanière, Gatinel, Saad [PEARL-DGS]; Super Ladas). Interestingly, none of the formulas that incorporate AI has been published in detail in the literature.
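To see why vergence formulas depend so heavily on ELP prediction, consider the classic thin-lens vergence equation for emmetropia, P = n/(AL - ELP) - n/(n/K - ELP), with lengths in meters, K the corneal power in diopters, and n = 1.336. The sketch below implements only this textbook form; it omits the corrections (retinal thickness, keratometric index conversion, IOL-specific constants) that named formulas such as SRK/T or Haigis add, and the input values are illustrative.

```python
N_EYE = 1.336  # refractive index of aqueous and vitreous in this simplified model

def iol_power_emmetropia(al_mm, k_d, elp_mm, n=N_EYE):
    """Thin-lens vergence IOL power (D) for emmetropia.

    al_mm: axial length (mm); k_d: corneal power (D); elp_mm: effective lens position (mm).
    """
    al_m, elp_m = al_mm / 1000, elp_mm / 1000
    # Vergence needed at the IOL plane to focus light on the retina...
    needed = n / (al_m - elp_m)
    # ...minus the vergence already arriving at the IOL plane from the cornea.
    arriving = n / (n / k_d - elp_m)
    return needed - arriving

for elp in (4.5, 5.0, 5.5):
    print(f"ELP {elp} mm -> IOL {iol_power_emmetropia(23.5, 43.5, elp):.2f} D")
```

For this average eye, each 0.5-mm shift in the assumed ELP changes the calculated IOL power by roughly 1 D, which is why better ELP prediction is a central target for AI-based refinements.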

In 1997, researchers first attempted to use a supervised learning method, a neural network, to calculate IOL power without the incorporation of key data (ie, anterior chamber depth and lens thickness).1 The method’s strengths were its ability to fit nonlinear relationships of any mathematical form and its lack of assumptions about the underlying form of the data; its drawback at the time was the computing power and memory it required. Even with a small training set of 150 eyes, however, the results of this initial research showed promise for neural network–based methods, and the proposed formula outperformed the Holladay I even without anterior chamber depth and lens thickness as inputs.

PROMISING DEVELOPMENTS

Backpropagation. In one proof-of-concept study of more than 15,000 eyes, a neural network architecture was tested on various data sets built from eyes with an axial length shorter or longer than 22 mm.2 These results supported the use of a multilayer perceptron trained with the backpropagation algorithm for predicting IOL power.
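The cited study’s architecture and inputs are not detailed here. The sketch below is a generic one-hidden-layer perceptron trained by backpropagation (full-batch gradient descent on mean squared error) using NumPy, with axial length and keratometry as inputs and IOL power as the target. The training labels come from a made-up nonlinear rule, so the example demonstrates the training mechanics, not clinical accuracy.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic training set (illustration only): axial length (mm) and mean K (D).
n = 500
al = rng.uniform(21.0, 27.0, n)
k = rng.uniform(40.0, 47.0, n)
# Made-up nonlinear target used only to generate training labels.
power = 118.4 - 2.5 * al - 0.9 * k + 0.15 * (al - 23.5) ** 2

# Standardize inputs and target so that gradient descent is well behaved.
X = np.column_stack([al, k])
x_mu, x_sd = X.mean(axis=0), X.std(axis=0)
y_mu, y_sd = power.mean(), power.std()
Xs = (X - x_mu) / x_sd
ys = ((power - y_mu) / y_sd).reshape(-1, 1)

# One hidden layer of 8 tanh units and a linear output unit.
hidden, lr = 8, 0.05
W1 = rng.normal(0, 0.5, (2, hidden))
b1 = np.zeros(hidden)
W2 = rng.normal(0, 0.5, (hidden, 1))
b2 = np.zeros(1)

for epoch in range(5000):
    # Forward pass.
    h = np.tanh(Xs @ W1 + b1)
    pred = h @ W2 + b2
    # Backward pass: gradients of the mean squared error via the chain rule.
    g_pred = 2 * (pred - ys) / n
    g_W2 = h.T @ g_pred
    g_b2 = g_pred.sum(axis=0)
    g_h = (g_pred @ W2.T) * (1 - h ** 2)  # tanh'(a) = 1 - tanh(a)^2
    g_W1 = Xs.T @ g_h
    g_b1 = g_h.sum(axis=0)
    # Gradient-descent update.
    W2 -= lr * g_W2
    b2 -= lr * g_b2
    W1 -= lr * g_W1
    b1 -= lr * g_b1

def predict_power(al_mm, k_d):
    """Predicted IOL power (D) for one eye, mapped back to diopters."""
    z = (np.array([al_mm, k_d]) - x_mu) / x_sd
    return float((np.tanh(z @ W1 + b1) @ W2 + b2)[0] * y_sd + y_mu)

train_rmse = float(np.sqrt(np.mean((pred - ys) ** 2)) * y_sd)
print(f"training RMSE: {train_rmse:.2f} D")
print(f"AL 23.5 mm, K 43.5 D -> {predict_power(23.5, 43.5):.1f} D")
```

A real study would also hold out a validation set and report error on eyes the network has never seen; this sketch only reports training error.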

Hill-RBF 2.0. The Hill-RBF was the first AI-based formula available for commercial use. It uses AI for pattern recognition and incorporates weighted radial basis functions that serve as interpolants of unstructured data in high-dimensional spaces. Because it is purely data driven, the formula does not require a prediction of ELP or vergence calculations. The Hill-RBF was developed using data from more than 12,000 eyes measured with the Lenstar biometer (Haag-Streit) and implanted with an SN60WF IOL (Alcon). The formula can find distinct patterns within scattered data, may be generalized to other biometers and to biconvex and meniscus IOL designs, and includes a function that flags cases likely to fall outside the bounds of its training data.
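The Hill-RBF implementation itself is proprietary. The sketch below illustrates only the underlying technique, Gaussian radial basis function interpolation of scattered data, together with a simple hypothetical bounds check based on the distance to the nearest training eye. The kernel width, regularization term, distance limit, and synthetic data are all assumptions.

```python
import numpy as np

class GaussianRBF:
    """Gaussian radial basis function interpolant with a simple bounds check (illustrative)."""

    def __init__(self, X, y, epsilon=2.0, reg=1e-3, max_dist=1.0):
        self.mu, self.sd = X.mean(axis=0), X.std(axis=0)
        self.centers = (X - self.mu) / self.sd  # one basis function per training eye
        self.epsilon, self.max_dist = epsilon, max_dist
        self.y_mean = y.mean()
        phi = self._kernel(self.centers, self.centers)
        # Solve (Phi + reg * I) w = y - mean(y); reg keeps the system well conditioned.
        self.weights = np.linalg.solve(phi + reg * np.eye(len(y)), y - self.y_mean)

    def _kernel(self, A, B):
        sq_dist = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
        return np.exp(-(self.epsilon ** 2) * sq_dist)

    def predict(self, x):
        """Return (prediction, in_bounds) for a single input row."""
        z = (np.asarray(x, dtype=float) - self.mu) / self.sd
        nearest = np.sqrt(((self.centers - z) ** 2).sum(axis=1)).min()
        value = (self._kernel(z[None, :], self.centers) @ self.weights)[0] + self.y_mean
        return float(value), bool(nearest <= self.max_dist)

# Synthetic training eyes (illustration only): axial length (mm) and mean K (D).
rng = np.random.default_rng(0)
X = np.column_stack([rng.uniform(21, 27, 150), rng.uniform(40, 47, 150)])
y = 118.4 - 2.5 * X[:, 0] - 0.9 * X[:, 1]  # made-up target resembling a regression formula

model = GaussianRBF(X, y)
print(model.predict([23.5, 43.5]))  # inside the training cloud
print(model.predict([32.0, 43.5]))  # far beyond the training axial lengths: flagged
```

Because an RBF model can only interpolate reliably where it has seen data, some form of out-of-bounds warning is a natural companion to this kind of formula.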

Super Ladas. This formula uses AI and big data to refine the calculations of five existing thin lens formulas (HQ, Holladay I, Holladay I with Koch adjustment, Haigis, and SRK/T). It relies on nonlinear regression modeling and 3D surface plotting to improve the predictions, and it identifies the regions in which these formulas agree and those in which they differ. It can be understood as a sophisticated version of the SRK regression approach, applied on top of already established biometric formulas.
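For readers less familiar with the SRK reference: the original SRK formula is a linear regression of IOL power on biometry, P = A - 2.5L - 0.9K, where A is the lens-specific A-constant, L the axial length in millimeters, and K the average keratometry in diopters. The snippet below implements only that textbook regression, not Super Ladas, to show what a regression-based IOL formula looks like in practice.

```python
def srk_power(a_constant, axial_length_mm, mean_k_d):
    """Original SRK regression formula: emmetropic IOL power in diopters."""
    return a_constant - 2.5 * axial_length_mm - 0.9 * mean_k_d

print(round(srk_power(118.4, 23.5, 43.5), 2))  # 20.5
```

Each extra millimeter of axial length lowers the predicted power by 2.5 D regardless of other measurements; that rigidity is what later, nonlinear approaches such as Super Ladas aim to overcome.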

Kane. The Kane formula was created using large data sets from selected high-volume surgeons that include approximately 30,000 eyes. It uses a combination of theoretical optics, thin lens formulas, and big data techniques to make its predictions and takes into account the variation of the main optical planes according to the design and the power range of an IOL.

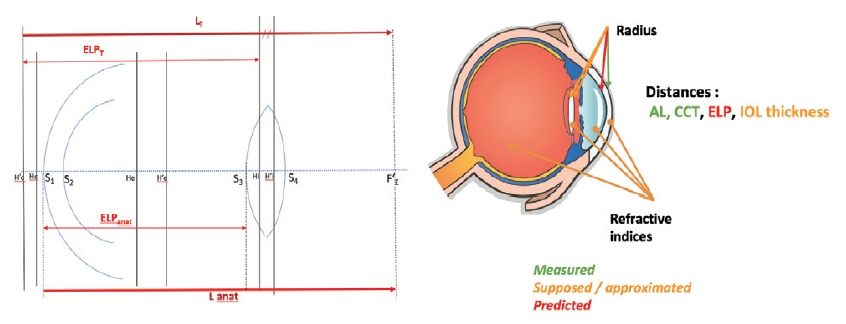

PEARL-DGS. My colleagues and I have developed a hybrid AI thick lens formula that uses two algorithms to improve the values of approximated or predicted data, such as ELP, the posterior corneal radius, and the refractive indices of different ocular segments (Figure 5).

Figure 5. An overview of the PEARL-DGS formula.

DISCUSSION AND CONCLUSION

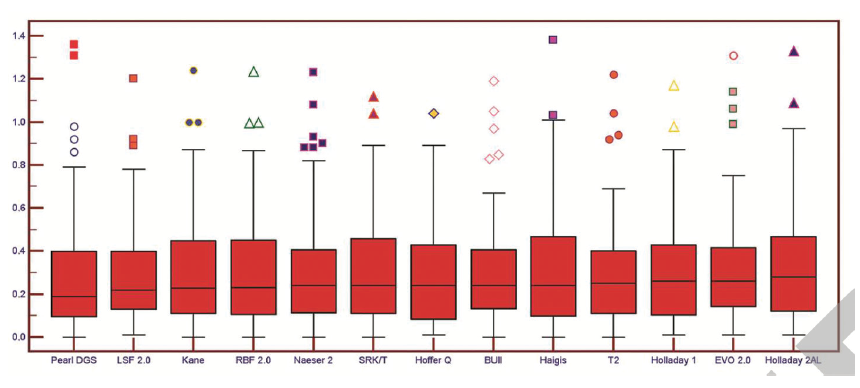

Recent studies suggest that AI and hybrid AI IOL calculation methods may provide equivalent or slightly better predictability compared with modern IOL formulas such as Olsen, Holladay II, and Barrett Universal II.3-5 In one study, 70% of eyes had a prediction error within ±0.50 D with both groups of formulas. In another study comparing AI-based formulas (Hill-RBF 2.0, Kane, and PEARL-DGS) with standard formulas based on Gaussian optics,4 the AI formulas systematically ranked second and generally placed in the top five for prediction error in short, medium, medium-long, and long eyes. Lastly, a study using Scheimpflug and partial coherence interferometry measurements showed that the current AI-based formulas occupied the first four places in the ranking (Figure 6). The most recent formulas achieved a prediction error within ±0.50 D in more than 85% of eyes.
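Comparisons like these rest on the prediction error (achieved minus predicted postoperative spherical equivalent) and on the percentage of eyes whose error falls within a given window. The sketch below computes those summary statistics for a small made-up sample. Many studies also remove each formula’s mean error before comparison, which mimics constant optimization; that step is included here as an option.

```python
from statistics import mean, median

def prediction_error_summary(achieved_se, predicted_se, window_d=0.50, zero_mean=True):
    """Summarize refractive prediction error (achieved minus predicted SE, in D)."""
    errors = [a - p for a, p in zip(achieved_se, predicted_se)]
    if zero_mean:
        # Remove the systematic offset, as constant optimization would.
        offset = mean(errors)
        errors = [e - offset for e in errors]
    abs_errors = [abs(e) for e in errors]
    within = 100 * sum(e <= window_d + 1e-9 for e in abs_errors) / len(abs_errors)
    return {"MAE": mean(abs_errors), "MedAE": median(abs_errors), "pct_within": within}

# Five hypothetical eyes, all targeted for plano.
achieved = [-0.25, 0.00, 0.50, -0.75, 0.25]
predicted = [0.0] * 5
raw = prediction_error_summary(achieved, predicted, zero_mean=False)
centered = prediction_error_summary(achieved, predicted)
print(raw)       # pct_within 80.0: four of five eyes within +/-0.50 D
print(centered)  # pct_within 60.0 after removing the -0.05 D mean error
```

With small samples, a single eye moves the percentage by 20 points, which is one reason the large cohorts cited above are needed to separate formulas whose performance differs only slightly.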

Figure 6. IOL power calculation formulas with a prediction error within ±0.50 D.

Figures 5 and 6 courtesy of Damien Gatinel, MD, PhD

There is a clear trend toward better outcomes with AI- and big data–based IOL calculation formulas, although the differences from traditional formulas are small. To improve further, AI formulas will have to handle other parameters, such as IOL design and adjustments to refractive index values. In the future, the ecosystem of IOL power calculation and biometry will have to be revised to connect to large databases and enable cloud computing.

1. Clarke GP, Burmeister J. Comparison of intraocular lens computations using a neural network versus the Holladay formula. J Cataract Refract Surg. 1997;23:1585-1589.

2. Fernández-Álvarez JC, Hernández-López I, Cruz-Cobas PP, Cárdenas-Díaz T, Batista-Leyva A. Using a multilayer perceptron in intraocular lens power calculation. J Cataract Refract Surg. 2019;45(12):1753-1761.

3. Darcy K, Gunn D, Tavassoli S, Sparrow J, Kane JX. Assessment of the accuracy of new and updated intraocular lens power calculation formulas in 10 930 eyes from the UK National Health Service. J Cataract Refract Surg. 2020;46(1):2-7.

4. Cheng H, Kane JX, Liu L, Li J, Cheng B, Wu M. Refractive predictability using the IOLMaster 700 and artificial intelligence-based IOL power formulas compared to standard formulas. J Refract Surg. 2020;36(7):466-472.

5. Taroni L, Hoffer KJ, Barboni P, Schiano-Lomoriello D, Savini G. Outcomes of IOL power calculation using measurements by a rotating Scheimpflug camera combined with partial coherence interferometry. J Cataract Refract Surg. 2020;46(12):1618-1623.

V2Rx Smart VR Phoropter

Bart Jaeken, PhD

V2Rx (Timiak Tech) is a miniature optical cabinet and virtual reality headset (Figure 7) designed to measure the visual performance and optical quality of the eye being tested. The device monitors the behavior of the eye with high-frequency eye tracking and wavefront sensing, and it fully controls the test conditions to optimize the virtual test environment. The V2Rx also has a small footprint.

Figure 7. The V2Rx optical cabinet and virtual reality headset.

The patient’s profile and the behavior of the eye are taken into account during testing. The V2Rx uses AI to process the data obtained by its sensors and produces information about the current state of the eye with respect to its dynamic range of vision. A number of diagnostic tests can be performed with the V2Rx, including the basic measurements of refractive error, visual acuity, contrast sensitivity, aberrometry, and pupillometry, and advanced diagnostics such as the defocus curve, vergence deficits, and accommodative response.

The entire diagnostic examination is quantified in metrics, and a detailed map of the state of the patient’s vision is generated. This information is used to train the algorithms, expanding their potential to detect pathologies at an earlier stage of disease. Because the V2Rx is controlled remotely, the doctor and patient need not be in the same room during testing (Figure 8).

Figure 8. The device has a small footprint.

Figures 7 and 8 courtesy of Bart Jaeken, PhD

CURRENT STATUS

The V2Rx is in the first phase of clinical validation. My colleagues and I hope to announce the commercial launch of the device in early 2023. The unit will connect to the digital vision care platform Nice2SeeU (Timiak Tech), designed to empower patients to preserve their vision. We plan to launch that platform this spring.

The Hoffer QST: Improving the Formula With AI

Kenneth J. Hoffer, MD, FACS; Giacomo Savini, MD; and Leonardo Taroni, MD

Vergence formulas have been the gold standard for IOL power calculation for more than 40 years.1-4 The Hoffer Q (HQ) formula has been the benchmark for comparison, especially in hyperopic eyes, since published research showed the HQ to be the most accurate option when axial length (AL) is shorter than 22.0 mm.5,6 Recent studies, however, have found that new formulas offer greater accuracy than the HQ and other traditional vergence formulas. These findings prompted us to investigate and attempt to overcome the HQ’s limitations.

TWO LIMITATIONS AND SUGGESTED IMPROVEMENTS

Limitation No. 1. The HQ tends to overestimate the IOL power in eyes with shallow anterior chambers and to underestimate it in eyes with deep anterior chambers.7,8 This leads to myopic and hyperopic refractive surprises, respectively.

Limitation No. 2. The performance of the HQ is weak in eyes longer than 26.0 mm, and the formula tends to produce hyperopic outcomes.

Improvements. We looked to machine learning, a branch of AI that can provide nonlinear regression models, to address these limitations.

The first step was to decide which elements of the HQ merited updating. The effective lens position (ELP) is one of the main contributors to error in IOL power calculation with modern biometry.9 We evaluated 537 eyes that had received the same monofocal IOL and achieved highly accurate refractive outcomes after cataract surgery, and we zeroed their prediction errors (PEs) by optimizing the ELP. Subtracting the HQ constant (postoperative anterior chamber depth, pACD) from the optimized ELP gave a new ELP correcting factor (T-factor) for each eye.
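The per-eye back-calculation can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' code: it uses a generic thin-lens vergence formula (assumed refractive index 1.336, assumed 12-mm vertex distance) rather than the published Hoffer Q equations, and the function names and bisection search interval are hypothetical. It searches for the ELP at which the formula predicts exactly the achieved refraction for the implanted IOL power, so that the PE is zero, and then subtracts pACD to obtain the T-factor.

```python
N_AQ = 1.336      # assumed refractive index of aqueous and vitreous
VERTEX_M = 0.012  # assumed spectacle vertex distance, in meters


def iol_power_for(elp_mm, al_mm, k_d, refraction_d):
    """Generic thin-lens vergence: IOL power (D) that yields refraction_d at the spectacle plane."""
    elp, al = elp_mm / 1000.0, al_mm / 1000.0
    # Spectacle refraction expressed at the corneal plane, plus corneal power.
    v_cornea = refraction_d / (1.0 - VERTEX_M * refraction_d) + k_d
    # Vergence reaching the IOL plane after traveling the ELP through aqueous.
    v_iol = N_AQ / (N_AQ / v_cornea - elp)
    # Vergence needed to focus on the retina, which lies AL - ELP behind the IOL.
    return N_AQ / (al - elp) - v_iol


def back_calculate_elp(al_mm, k_d, implanted_d, achieved_d, lo=2.0, hi=8.0, tol=1e-6):
    """Bisection for the ELP (mm) at which the formula reproduces the achieved refraction (PE = 0)."""
    def residual(elp_mm):
        return iol_power_for(elp_mm, al_mm, k_d, achieved_d) - implanted_d

    r_lo, r_hi = residual(lo), residual(hi)
    if r_lo * r_hi > 0:
        raise ValueError("no ELP in the search interval zeroes the prediction error")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        r_mid = residual(mid)
        if r_lo * r_mid <= 0:
            hi = mid          # root lies in the lower half
        else:
            lo, r_lo = mid, r_mid  # root lies in the upper half
    return 0.5 * (lo + hi)


def t_factor(optimized_elp_mm, pacd_mm):
    """Per-eye correcting factor: optimized ELP minus the lens constant pACD."""
    return optimized_elp_mm - pacd_mm
```

A round trip makes the logic easy to check: compute the power for a known ELP with `iol_power_for`, then confirm that `back_calculate_elp` recovers that ELP from the power and the refraction.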

Retaining the HQ’s pACD constant allowed us to build the new ELP equation around a lens constant that is readily available for every IOL, such as the constants published on the User Group for Laser Interference Biometry or IOLCON websites. We then used machine learning to create a model that takes the patient’s sex and biometric data (eg, AL, average keratometry reading, ACD, corneal radius) as inputs to calculate the T-factor. Biometric parameters that did not improve ELP prediction were excluded from the model.
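The machine learning algorithm behind the T-factor model is not named here, so the sketch below substitutes k-nearest-neighbor regression, one simple nonlinear, nonparametric method, purely to show the shape of the task. The inputs are sex (assumed coded 0/1) and biometry, and the output is the per-eye T-factor. The feature order, class name, and toy data are hypothetical.

```python
from math import dist
from statistics import mean, pstdev


class KNNRegressor:
    """k-nearest-neighbor regression on z-scored features (a hypothetical stand-in model)."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        # Per-feature mean and SD so that millimeter and diopter scales contribute comparably.
        self.stats = []
        for col in zip(*X):
            sd = pstdev(col) or 1.0  # guard against a constant column
            self.stats.append((mean(col), sd))
        self.X = [self._scale(x) for x in X]
        self.y = list(y)
        return self

    def _scale(self, x):
        return [(v - m) / s for v, (m, s) in zip(x, self.stats)]

    def predict(self, x):
        # Average the targets of the k training eyes closest to the query eye.
        q = self._scale(x)
        ranked = sorted(zip((dist(q, r) for r in self.X), self.y))
        return mean(t for _, t in ranked[: self.k])


# Toy rows (made-up numbers): [sex, AL mm, mean K D, ACD mm] -> T-factor (mm).
X = [
    [0, 22.0, 45.0, 2.6],
    [1, 23.5, 43.5, 3.1],
    [0, 24.5, 43.0, 3.3],
    [1, 26.0, 42.5, 3.6],
    [0, 27.5, 42.0, 3.8],
]
y = [-0.30, 0.05, 0.10, 0.25, 0.40]
model = KNNRegressor(k=3).fit(X, y)
```

A neighbor-based model is used only because it needs no external libraries and makes the nonlinear input-to-output mapping explicit; a real model would be trained and validated on a large clinical dataset.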

As a second step, we developed a customized AL adjustment for long eyes following the same method adopted for the T-factor. Briefly, we zeroed the PEs of approximately 200 long eyes (AL > 25.0 mm) to optimize the AL. After determining the AL adjustment from the difference between the original AL and the optimized AL, we developed a nonlinear model to estimate it.
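With the ELP held fixed, the same zeroing idea has a closed-form answer for the axial length. The sketch below again assumes a generic thin-lens vergence formula (refractive index 1.336, 12-mm vertex distance) rather than the Hoffer QST equations; the function names and the sign convention (optimized AL minus measured AL) are assumptions for illustration.

```python
N_AQ = 1.336      # assumed refractive index of aqueous and vitreous
VERTEX_M = 0.012  # assumed spectacle vertex distance, in meters


def _vergence_at_iol(elp_mm, k_d, refraction_d):
    """Vergence (D) reaching the IOL plane for a given spectacle refraction and corneal power."""
    v_cornea = refraction_d / (1.0 - VERTEX_M * refraction_d) + k_d
    return N_AQ / (N_AQ / v_cornea - elp_mm / 1000.0)


def iol_power_for(elp_mm, al_mm, k_d, refraction_d):
    """Forward thin-lens formula: IOL power (D) for a target refraction."""
    return N_AQ / ((al_mm - elp_mm) / 1000.0) - _vergence_at_iol(elp_mm, k_d, refraction_d)


def optimized_al_mm(elp_mm, k_d, implanted_d, achieved_d):
    """AL (mm) at which the formula predicts the achieved refraction, ie, PE = 0."""
    # Solve N / (AL - ELP) = P + v_iol for AL.
    v_iol = _vergence_at_iol(elp_mm, k_d, achieved_d)
    return elp_mm + 1000.0 * N_AQ / (implanted_d + v_iol)


def al_adjustment_mm(measured_al_mm, elp_mm, k_d, implanted_d, achieved_d):
    """Per-eye AL adjustment (the target a nonlinear model would learn to estimate)."""
    return optimized_al_mm(elp_mm, k_d, implanted_d, achieved_d) - measured_al_mm
```

Each long eye contributes one adjustment value; a nonlinear model, such as the neighbor-based stand-in sketched for the T-factor, could then be fitted to predict that value from biometry.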

CONCLUSION

We updated the HQ by means of new algorithms and machine learning to create the Hoffer Q/Savini/Taroni formula (Hoffer QST).

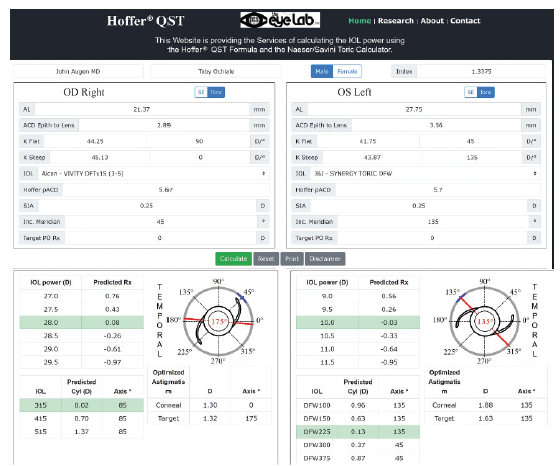

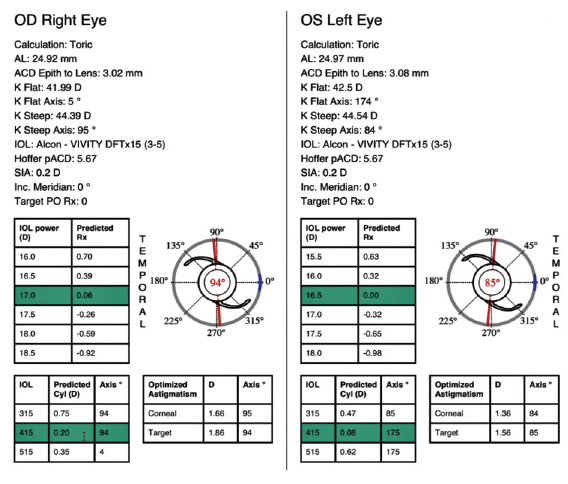

The Hoffer QST calculator (Figure 9) is available for free at www.HofferQST.com and at www.EyeLab.com. It includes the Naeser/Savini Toric calculator, which produces a complete printout (Figure 10) for patient charts or electronic medical records. A research section at the top of the page allows users to download specific spreadsheets, populate them with their data, upload the completed spreadsheets to the site, and receive multiple calculations at once or an optimized Hoffer QST lens constant (pACD).

Figure 9. The Hoffer QST with toric calculation.

Figure 10. An example of a Hoffer QST printout.

Figures 9 and 10 courtesy of Giacomo Savini, MD

Based on our results to date, IOL power calculations with the Hoffer QST appear to be as accurate as or more accurate than (depending on the parameter measured) the most accurate formulas available today. We recently submitted our clinical results for publication.

1. Haigis W, Lege B, Miller N, Schneider B. Comparison of immersion ultrasound biometry and partial coherence interferometry for intraocular lens calculation according to Haigis. Graefes Arch Clin Exp Ophthalmol. 2000;238(9):765-773.

2. Hoffer KJ. The Hoffer Q formula: a comparison of theoretic and regression formulas. J Cataract Refract Surg. 1993;19(6):700-712; errata, 1994;20(6):677 and 2007;33(1):2-3.

3. Holladay JT, Prager TC, Chandler TY, Musgrove KH, Lewis JW, Ruiz RS. A three-part system for refining intraocular lens power calculations. J Cataract Refract Surg. 1988;14(1):17-24.

4. Retzlaff JA, Sanders DR, Kraff MC. Development of the SRK/T intraocular lens power calculation formula. J Cataract Refract Surg. 1990;16(3):333-340.

5. Hoffer KJ. Clinical results using the Holladay 2 intraocular lens power formula. J Cataract Refract Surg. 2000;26(8):1233-1237.

6. Aristodemou P, Knox Cartwright NE, Sparrow JM, Johnston RL. Formula choice: Hoffer Q, Holladay 1, or SRK/T and refractive outcomes in 8108 eyes after cataract surgery with biometry by partial coherence interferometry. J Cataract Refract Surg. 2011;37(1):63-71.

7. Eom Y, Kang SY, Song JS, Kim YY, Kim HM. Comparison of Hoffer Q and Haigis formulae for intraocular lens power calculation according to the anterior chamber depth in short eyes. Am J Ophthalmol. 2014;157(4):818-824.

8. Melles RB, Holladay JT, Chang WJ. Accuracy of intraocular lens calculation formulas. Ophthalmology. 2018;125(2):169-178.

9. Norrby S. Sources of error in intraocular lens power calculation. J Cataract Refract Surg. 2008;34(3):368-376.

Embracing Teleophthalmology

Robert P.L. Wisse, MD, PhD

At the 2020 ESCRS Virtual Meeting, I delivered an update on the latest developments in telemedicine.1 I reported that COVID-19 sparked a paradigm shift toward remote care, in which technology is used to deliver appropriate care and retain continuity when physical access to health care is limited. The importance of high-quality telehealth became clear during the first months of the pandemic, but its utility will not end when COVID-19 is brought under control. A change in eye care delivery is required to meet the demands of an aging society.

E-HEALTH TOOLS

Electronic health (e-health) technology has the ability to increase global access to eye care. These tools enable laypeople to measure aspects of their own visual function in personal settings through the use of smartphones, tablets, and computers.

A plethora of e-health tools for visual function testing are available on the internet and in mobile app stores,2 but extensive validation research and certification are required before a digital self-testing tool can be implemented in clinical practice. The University Medical Center (UMC) Utrecht is collaborating with Easee, a medical technology company based in Amsterdam. Easee was the first to develop an EU-certified CE-1m digital tool for the self-assessment of visual acuity and refractive errors. Since March 2020, this tool has been valuable for a program aimed at remotely triaging medical emergencies and rescheduling appointments.

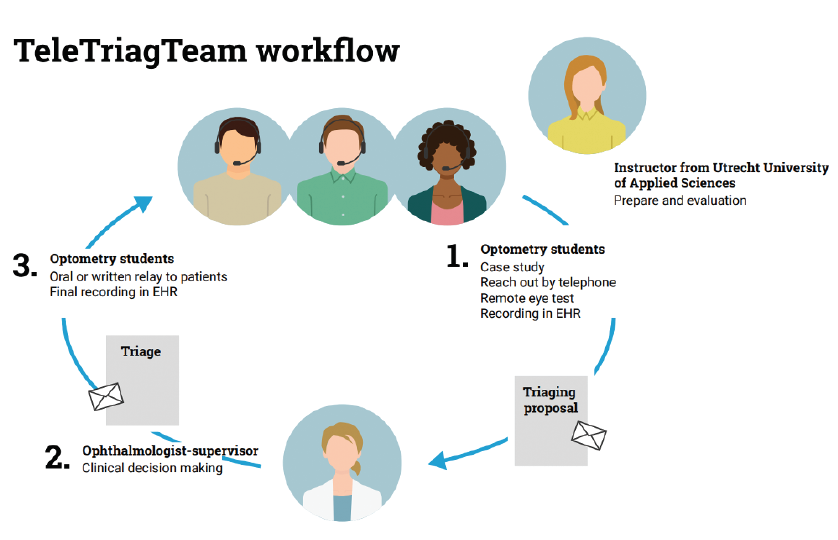

This ongoing collaboration between the Utrecht University of Applied Sciences and the UMC Utrecht is dubbed the TeleTriageTeam. As part of this program, optometry students contact patients to assess whether remote eye testing is indicated and whether patients are capable of performing it (Figure 11). Clinical educators guide the process, and in accordance with Dutch law, the supervising ophthalmologist finalizes the clinical decisions. Everything is recorded in an electronic medical record system and relayed to patients and their general practitioners or external ophthalmologists.

Figure 11. Schematic of the workflow used by TeleTriageTeam.

Courtesy of Robert P.L. Wisse, MD, PhD

TACKLING THE BACKLOG OF APPOINTMENTS

The main lesson to be learned from the TeleTriageTeam is that openness to health care innovation is vital for adapting to a rapidly changing environment. By tackling an enormous backlog of outpatient appointments, this program has drastically reduced deferred care. More than 50% of the appointments were either safely converted to a teleconsultation or postponed and referred to regional professionals. To date, more than 3,000 patients have participated in the program.

The hospital has actively made the online eye testing modules available, potentially giving all of UMC Utrecht’s patients access to this novel technology. The actual uptake of the remote eye test, however, has been much lower. We are evaluating the contributing factors, but it appears that a lack of digital skills and of trust in technology among both professionals and patients plays a major role.

This partnership between academia and Easee has been mutually beneficial.3 Remote eye examinations have been integrated into the hospital’s electronic medical record system as a COVID-19 relief action. At the same time, staff at the UMC Utrecht have performed validation studies of the remote eye examination in various populations, including healthy young individuals4 and patients with keratoconus and uveitis.

LOOKING AHEAD

The future of telemedicine in ophthalmology is bright. Easee also recently announced that it has been listed by the FDA as class 1, 510(k) exempt. The necessary technology is coming of age, and governments, insurers, health care providers, and the general public are becoming more accepting of telehealth. The greatest challenges at present are integrating new technology into existing systems and maximizing inclusivity. These tools should not be reserved for tech-savvy early adopters but should instead be aimed at typical ophthalmic patients, who tend to be older, often have compromised vision, and are often less technologically adept.

Our next goal is to investigate the clinical validity and relevance of upcoming e-health solutions in ophthalmology with our Digital Eye Health research consortium, which will have relevance both for medical settings and e-commerce retail.

1. Wisse R. Digital eye testing in cataract and refractive care: telemonitoring as a paradigm shift. Paper presented at: 38th Congress of the ESCRS Virtual Meeting; October 2-4, 2020.

2. Yeung WK, Dawes P, Pye A, Neil M, Aslam T, Dickinson C, Leroi I. eHealth tools for the self-testing of visual acuity: a scoping review. NPJ Digit Med. 2019;2:82.

3. Digital eye health; towards remote monitoring in eyecare. Health Holland. Accessed February 2, 2021. https://www.health-holland.com/project/2020/digital-eye-health-towards-remote-monitoring-in-eyecare

4. Wisse RPL, Muijzer MB, Cassano F, Godefrooij DA, Prevoo YFDM, Soeters N. Validation of an independent web-based tool for measuring visual acuity and refractive error (the Manifest versus Online Refractive Evaluation Trial): prospective open-label noninferiority clinical trial. J Med Internet Res. 2019;21(11):e14808.